Google Analytics is limited.

It is a mere tool of the clickstream. And the clickstream has its limits.

The clickstream measures the degree of interaction on your website. It records how much a user (hopefully human) interacts with your website. What it cannot tell you is the kind of interaction: whether it is positive or negative.

Your fan will spend half an hour on your website devouring your content. So will the confused citizen, using your broken search and failing to find what they are looking for. Both will register as a high degree of interaction on your website.

To be effective you must discover the kind of interaction on your website.

One of the most effective ways to do this is through primary market research. Specifically, surveys of your targeted citizens.

The voice of citizens offers insights that you cannot find anywhere else.

Analytics guru Avinash Kaushik wrote in his book Web Analytics 2.0 that for huge organisations, such as government, the priority tool for gaining insights is the voice of the customer (ahead of measuring outcomes and recording the clickstream).

Surveys are your best friend.

Our recent survey was a great success. We received over 100 responses.

The most common response in the survey was that you want more case studies. And that’s what this article is: one big case study.

It includes our failures in survey design and the lessons you can learn from them.

Ask, “How will I use this information?”

“Is this question useful?”

This is what we asked for every question in the survey.

Inevitably, the response was, “Yes, of course.”

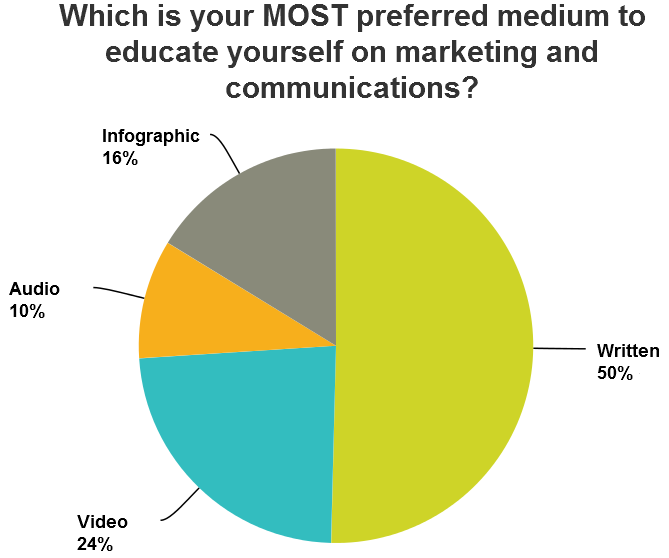

One of our favourite questions asked for your most preferred medium for educating yourself on communications and marketing. Is this question useful? Yes. How will we use this information? We’ll focus our content efforts on your most preferred medium.

Here are the results.

This question gave us multiple bits of actionable knowledge. (Prioritise written; our podcast is the favourite way to learn for some of our audience; video is popular and infographics have their fans.)

The next question we asked you was your “LEAST preferred medium.” Is this useful? Of course. On intuition, this would appear to give us as much actionable knowledge as the previous question. We would change our content strategy to exile any despised medium.

How would we use this information?

Upon closer investigation, we discovered that we would not exile the least preferred medium.

The least preferred medium was by far audio. Will we scrap our podcast? No. Audio is the most preferred medium for a segment of our audience. It also provides us with other benefits, such as prestige, reputation, building relationships and learning opportunities. For the time invested, it has a great return.

What we failed to ask was “How will I use this information?” In this example, we will not use the information of your least preferred medium.

In your surveys, ask yourself how you will use the information. And be detailed. It’s the details that will tell you if the question is worth asking.

Place harsh limitations on each question

To be vague is the death of insight.

We wanted our questions to be specific. And yet we wanted to leave out details that we thought may bias or limit the question. But limits are where you get the answers.

When you want specific answers, ask a specific question.

We asked you, “What other blogs do you read?” We designed this question to learn your interests, which other marketing blogs you follow and your preference for reading blogs. We can’t all get what we want, eh?

About half of the responses were useful.

We received responses such as “various,” “the ones I have a personal interest in,” and “other content marketing blogs.” These responses were as vague as our question was. How could we expect specific answers? You can’t read our minds.

Our question should have been more direct. We should have split it up into two or more questions. For instance, “What other marketing-related blogs do you read?” and “How many blogs do you follow on a regular basis?”

Place limitations on your questions. To receive useful responses, this is mandatory. Without limits on each question, you receive vague answers.

Sidenote: Occasionally, you want to give the respondent complete freedom to roam. The vast majority of responses will be of little use, but a minority will be pure gold. This is only possible with a 100% open-ended question. We received some enlightening responses to “Do you have any other comments, questions, or concerns?”

Select the right definitions

For any statistic, you must define it.

As much as this sentence seems like a walk in the park, it is the cause of an apocalypse full of problems.

In The Tiger That Isn’t: Seeing Through a World of Numbers, Michael Blastland and Andrew Dilnot put this so poetically:

“The difference is between counting, which is easy, and counting something, which is anything but… That is why, when counting something, we have to squash it into a shape that fits the numbers. (In an ideal world, the process would be the other way round, so that numbers described life, not bullied it.)”

Consider the unemployment rate. When do you call someone unemployed? Must they be entirely unemployed? Can they work an hour a week or two or three? What if they work, but don’t get paid? Do they have to be looking for work? If so, how hard? One hour a week? Two?

Definitions are hard. Ideas are easy, but it’s in the specifics where everything goes grey.

We wanted to find out the quality of the content that we create for you. Our initial question was, “How would you rate the quality of our blog posts?” We reconsidered. (Remember, death of insight.)

We further defined what quality meant. The question became, “How useful do you find our blog posts?” We want you to apply our lessons to your work. We want them to be practical.

We changed all questions about quality to asking about usefulness. We asked you about our blog posts, newsletter, Q&A series and podcast. We thought this definition would suffice. However, we were wrong.

Not only is this question still vague, it can’t be applied to all our mediums.

Our podcast returned as being the “least useful” of all our platforms. Our initial reaction was wallowing in depression. Then we thought about it.

Least useful? The single metric of a successful content marketing podcast for government is usefulness? Not entirely.

Usefulness is ONE important variable in the measurement of our podcast. Usefulness is important, no doubt. (We recorded a nice correlation between perceived usefulness and how often the podcast was listened to.) There are other variables in the equation though.

A successful podcast on content marketing in government is a function of both usefulness and entertainment (amongst others). A regular podcast listener described it as, “I listen to your podcast to be inspired first, then to learn and understand.” Entertainment is required in the podcast medium but less so for text. This is what our definition of success failed to include.

As Michael Blastland and Andrew Dilnot put it, “if it has been counted, it has been defined, and that will almost always have meant using force to squeeze reality into boxes that don’t fit.”

The early bird catches the worm

The length of the survey must always be considered.

It is well known that the longer the survey, the fewer responses you receive. That is why culling useless questions is necessary. (Ask, “How will I use this information?”)

We took the risk of asking you to complete one long survey instead of several shorter ones. We did this because we believed that one large thank-you prize offers more motivation than several smaller prizes. (Think $50 million lottery prizes.)

What we forgot was that there would still be an abandonment rate throughout the survey.

About a quarter of all respondents failed to complete the survey.

This meant that earlier questions had more responses than later questions.

Place the most important questions at the beginning of the survey. This ensures that these questions get answered by the maximum number of respondents.
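To see why position matters, here is a rough sketch of how response counts might fall away across a survey. The figures are assumptions for illustration only: 100 people start, three quarters finish, the survey has 10 questions, and the same share of remaining respondents drops out before each question. The function name `responses_per_question` is made up for this example, and real abandonment is unlikely to be this smooth.

```python
def responses_per_question(started, completion_rate, num_questions):
    """Estimate how many respondents reach each question position.

    Assumes (for illustration) that the same share of the remaining
    respondents drops out before every question after the first.
    """
    if num_questions < 2:
        raise ValueError("need at least two questions")
    # Per-question retention chosen so that the last question is reached
    # by started * completion_rate respondents.
    retention = completion_rate ** (1 / (num_questions - 1))
    return [round(started * retention ** position)
            for position in range(num_questions)]


# Hypothetical inputs: 100 starters, 75% completion, 10 questions.
counts = responses_per_question(started=100, completion_rate=0.75, num_questions=10)
for position, count in enumerate(counts, start=1):
    print(f"Question {position}: about {count} responses")
```

Under these assumptions the first question is answered by everyone who starts, while the last is answered only by those who finish. Any question you cannot afford to lose responses on belongs near the top.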

Order low-to-high

The order of questions and answers affects your results. This is known. It is often the result of framing created by previous questions. Randomisation is used to counter this. But randomisation aims to wash out every order effect, and perhaps there is one bias you want to keep: a bias to complete your survey.

In the scientific paper Order in Product Customization Decisions, researchers found that the order in which decisions are presented, ranked by the number of options each decision has, affects results.

In an experiment on the Audi website, researchers found that buyers were much more likely to accept the default option in a high-to-low sequence. This sequence presented decisions from the attribute with the most options (interior colour, 56) to that of the least (gearshift style, 4).

This suggests that buyers in the high-to-low group found it more difficult to make decisions. They chose to opt out of the decision and accepted the default, a sign that they became overwhelmed and fatigued by the challenging decisions that came first. They were also less satisfied with their own experience than the low-to-high group.

This means your survey should start with simple questions and advance in complexity as the survey progresses.

Do not start your survey with a hard open-ended question. In our survey, the hardest question we asked you was “What is content marketing?” This question required so much effort that many respondents chose to copy the definition, word-for-word, from Wikipedia. This would be a horrendous question to start a survey with.

Start your survey with short multiple-choice questions.

Start simple and proceed in complexity.
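If you keep your draft questions in a list, a low-to-high ordering can be sketched in a few lines. This is only one possible way to rank effort, and it rests on assumptions: closed questions are treated as easier than open-ended ones, and closed questions with fewer options as easier still. The `Question` class, the `order_low_to_high` function and the option counts are hypothetical, made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Question:
    text: str
    open_ended: bool
    num_options: int = 0  # only meaningful for closed questions


def order_low_to_high(questions):
    """Sort closed questions first (fewest options first), open-ended last."""
    # False sorts before True, so closed questions come first.
    # sorted() is stable, so ties keep their original order.
    return sorted(questions, key=lambda q: (q.open_ended, q.num_options))


# Option counts here are assumed for illustration.
questions = [
    Question("What is content marketing?", open_ended=True),
    Question("How useful do you find our blog posts?", open_ended=False, num_options=5),
    Question("What other marketing-related blogs do you read?", open_ended=True),
    Question("What is your most preferred medium?", open_ended=False, num_options=4),
]

ordered = order_low_to_high(questions)
for number, question in enumerate(ordered, start=1):
    print(number, question.text)
```

Treat the result as a starting draft. You still need to weigh it against importance, because a question that matters most may deserve an early slot even if it takes a little more effort to answer.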

The statistician and psychologist

An effective survey is not easy.

You must think like a statistician and be a psychologist.

You must ask the right questions to receive the highest number of useful responses. You must:

- Select the right definitions

- Place harsh limitations on each question

- Ask, “How will I use this information?”

- Put the most important questions first (the early bird catches the worm)

- Order low-to-high in complexity.

You should conduct surveys frequently. Surveys are not only an important part of market research (done prior to the campaign) but also fundamental to its continuous measurement and evaluation. This constant feedback will keep you on the right path to successfully communicating with your citizens.

We encourage you to start drafting a survey today.

What will be your first question in your next survey? Tell us in the comments below. Give us some context on the project you’re communicating and your audience.

